Client: YouTube

My Role: UX Researcher

Pictorial and textual representations both play an important role in decision making during a selection task. Our eye-tracking study asks: which of the two gets more attention on a video-sharing service, and by how much?

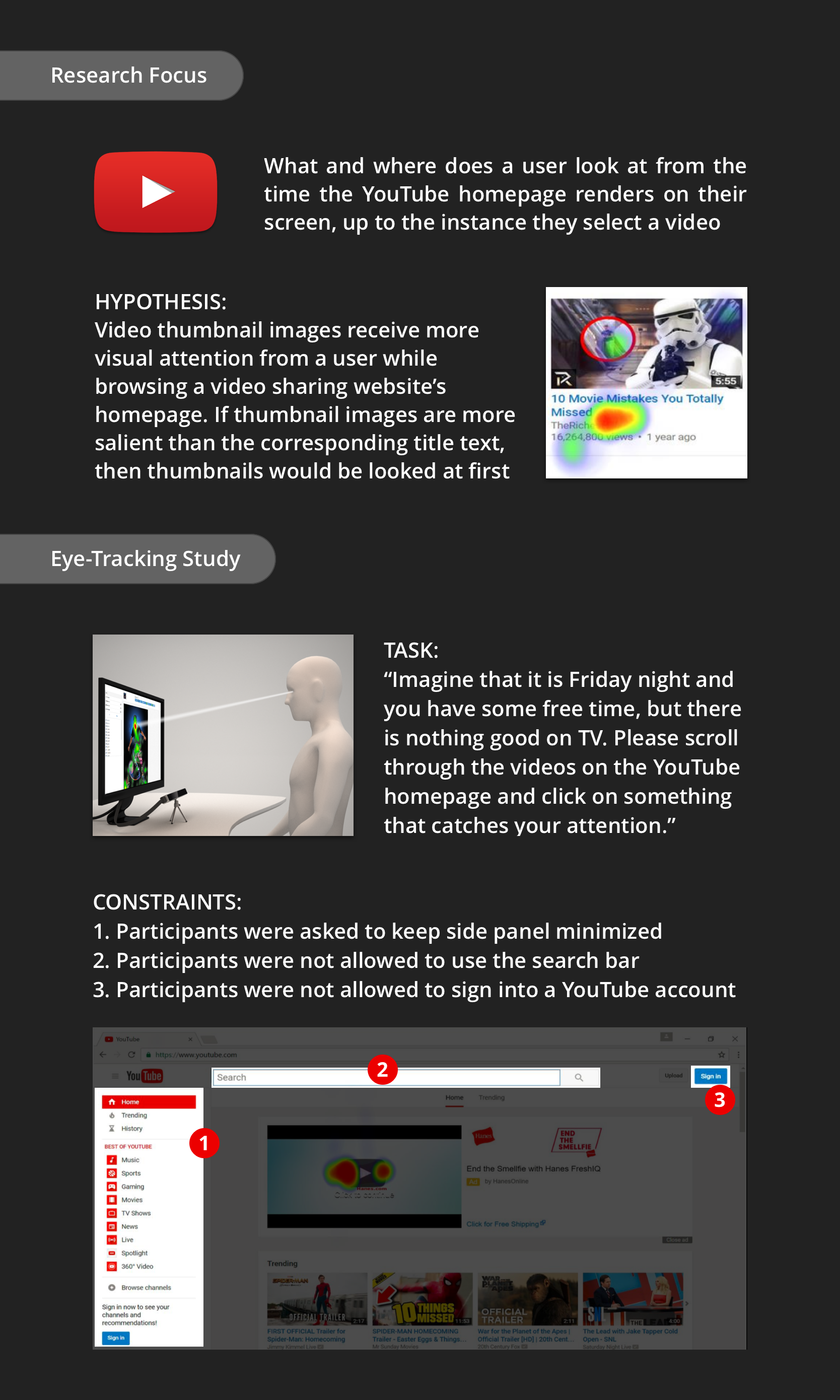

Conduct an IRB-approved eye-tracking study to analyze and understand users' visual attention while they browse YouTube's homepage.

The study was designed to focus on users' visual attention to the elements of the YouTube homepage that play a role in video selection. To that end, we laid out guidelines for the study, along with some constraints.

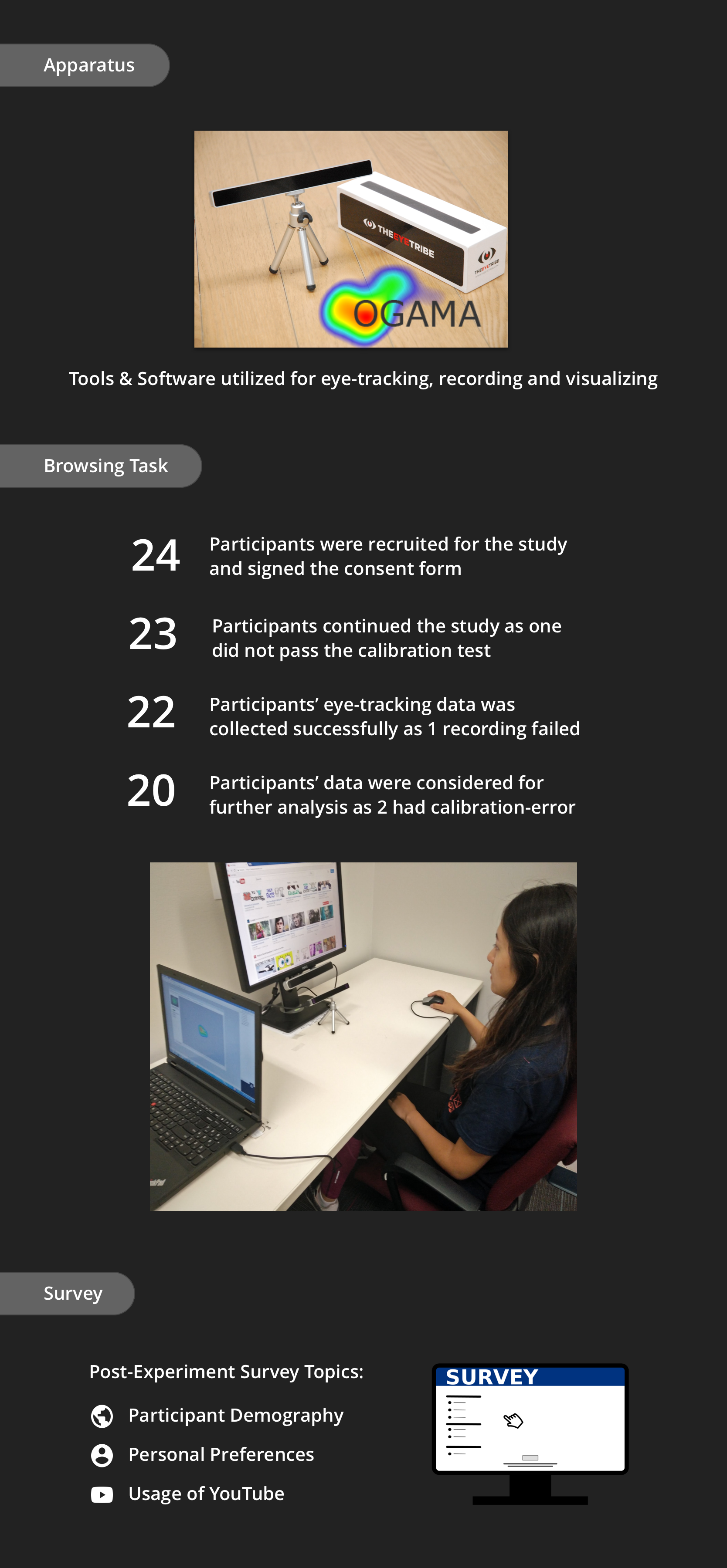

Once participants had signed the consent form, we began the experiment by calibrating the eye-tracker to their eye movements within its tracking range. OGAMA allowed us to record and visualize the eye-tracking data.

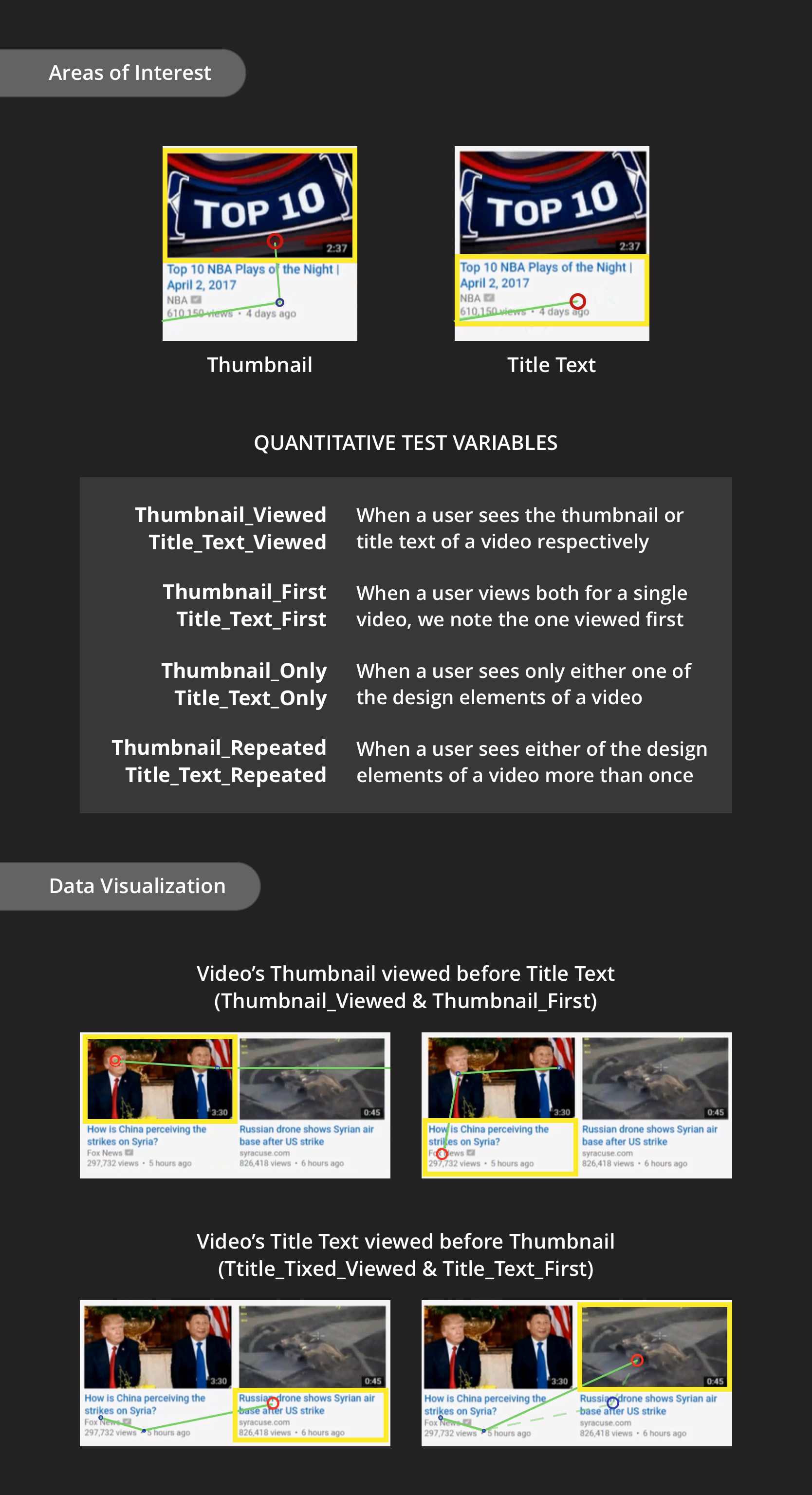

We treated each browsing session as a trial, defined as the recording from the moment the YouTube homepage loads to the moment the participant selects a video. For each trial, we noted the eye fixations (red and blue circles) and the saccades (green lines) to understand the participant's visual attention.

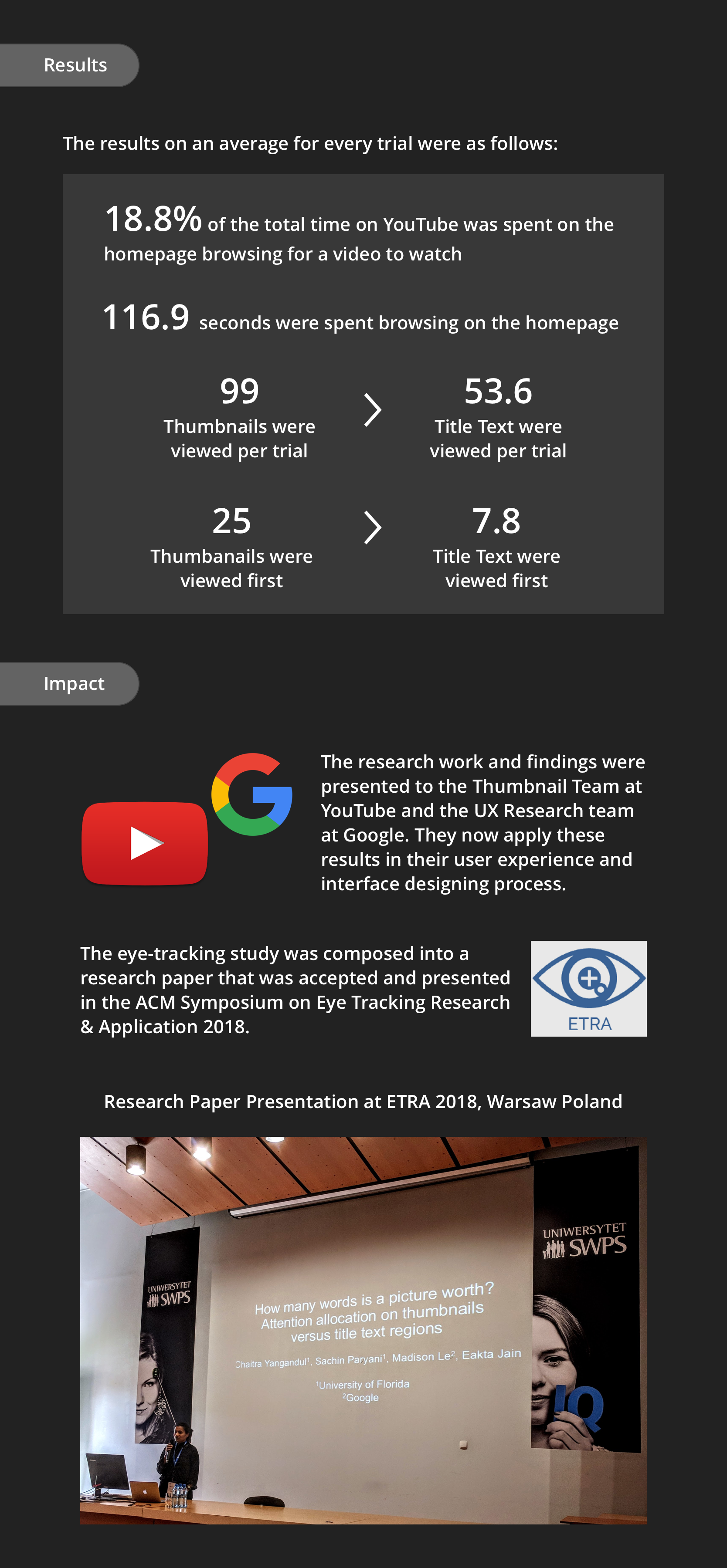
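
As a rough illustration of how per-trial fixation data like this could be summarized, the sketch below totals fixation time per area of interest (AOI) and finds which AOI was fixated first in each trial (one browsing session). The `Fixation` record, its field names, the AOI labels, and the sample values are all hypothetical; they are not OGAMA's export format and not data from the study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fixation:
    # Hypothetical record layout, assumed for illustration only.
    trial_id: int     # identifies one browsing session
    start_ms: int     # fixation onset, relative to page load
    duration_ms: int  # how long the fixation lasted
    aoi: str          # labelled area of interest: "thumbnail", "title", or "other"


def dwell_time_by_aoi(fixations):
    """Sum fixation durations per AOI, separately for each trial."""
    totals = defaultdict(lambda: defaultdict(int))
    for f in fixations:
        totals[f.trial_id][f.aoi] += f.duration_ms
    return {trial: dict(per_aoi) for trial, per_aoi in totals.items()}


def first_fixated_aoi(fixations, aois=("thumbnail", "title")):
    """For each trial, return which of the given AOIs was fixated first."""
    first = {}
    for f in sorted(fixations, key=lambda f: (f.trial_id, f.start_ms)):
        if f.aoi in aois and f.trial_id not in first:
            first[f.trial_id] = f.aoi
    return first


# Made-up fixations for two trials.
sample = [
    Fixation(1, 120, 300, "thumbnail"),
    Fixation(1, 480, 150, "title"),
    Fixation(1, 700, 250, "thumbnail"),
    Fixation(2, 90, 200, "other"),
    Fixation(2, 350, 400, "thumbnail"),
    Fixation(2, 800, 180, "title"),
]

dwell = dwell_time_by_aoi(sample)
first = first_fixated_aoi(sample)
```

Keeping the totals per trial, rather than pooling all fixations, preserves the pairing between thumbnail and title measurements that a paired comparison needs.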

Based on the results, we concluded that a video's thumbnail received almost twice as much attention as its title text. Users also overwhelmingly looked at thumbnails before title text. We confirmed these results with a two-tailed paired-samples t-test, which supports our hypothesis.

Official Project Website

I had the opportunity to collaborate with, and get great feedback from, the brightest minds at Google and on the YouTube design team. My research mentor, Dr. Eakta Jain, discussed this project during their talk on “Perceptual Cues for Social Platforms” at Google Tech Talks.